👋 Hey friends,

A few weeks ago, a founder gave me a demo of his new AI startup.

Beautiful dashboard. Clean interface. Dozens of integrations.

When he finished, I asked one question:

“What’s the one workflow your users can’t live without?”

He paused. Then smiled a little too long.

That silence told me everything.

It reminded me how easy it is to believe that success in AI comes from building more — more features, more models, more hype — when in reality, it comes from making one small thing work so reliably that people build their habits around it.

Every lasting AI product — from Jasper to Rewind to Notion — started with a single workflow that worked just a little better than expected. Then they doubled down, learned fast, and scaled slowly.

That’s what separates products that grow from demos that fade.

So today’s issue is all about that: the discipline of starting small, learning fast, and building deep.

In today’s edition, we’ll explore:

Why small, focused experiments beat big ambitions.
How narrowing your wedge accelerates learning and adoption.

How to turn one clever AI idea into a repeatable workflow.
The process of transforming a working prompt into a system that runs (and improves) on its own.

Why scaling learning matters more than scaling code.
The simple framework top AI teams use to compound insight over time.

Common Pitfalls When Scaling AI Products.
The four mistakes that quietly kill momentum, and how to avoid them before they derail your loop.

The Macro Shift: From Tools to Ecosystems.
Why the future belongs to adaptive systems that learn faster than competitors can copy them.

Let’s dive in.

— Naseema Perveen

The Data: What the Numbers Reveal About Scaling AI

Before we get into frameworks, let’s ground this in what the data actually says about the current AI landscape.

Because behind every “success story” you see on LinkedIn, there are hundreds of quiet experiments that never make it out of beta.

The Production Gap

According to McKinsey’s 2025 State of AI:

42 percent of organizations abandoned most of their AI projects before they reached production.

Only 8 percent said their AI initiatives materially improved profitability.

The biggest bottleneck wasn’t model accuracy — it was integration and iteration.

In other words, ideas died in hand-offs, not in training runs.

The Pilot-to-Impact Problem

An MIT Sloan study covering 400 AI initiatives found that 95 percent of generative-AI pilots produced no measurable P&L impact.

Most stalled after the prototype phase because they lacked a repeatable workflow and feedback loop.

The lesson: a great demo is easy.

A dependable system is rare.

Trust as a KPI

A Gartner survey found that 60 percent of customer service agents fail to promote self-service.

Reliability, it turns out, is more valuable than brilliance.

What the Numbers Tell Us

Across all studies, one theme repeats:

AI success scales with feedback loops, not feature lists.

Teams that iterate weekly outperform those that release quarterly.

Products that collect feedback continuously build trust faster.

Narrow use cases produce deeper data, which compounds learning.

In short: learning velocity is the new growth metric.

Step 1 — Start Narrow, Then Deep

When technology feels infinite, focus becomes a competitive advantage.

Why narrow wins

AI products fail for two reasons: they try to solve too many problems, or they ignore feedback too long.

The most resilient AI startups did the opposite — they started painfully specific.

Jasper didn’t chase “AI for marketing.”

It targeted one group — content marketers drowning in blog deadlines — and promised a 10× speed boost.

Within months, users were generating five million words per day.

Runway didn’t launch as “the video AI platform.”

It began with background removal, collected millions of labeled frames, and used that precision to expand into generative video.

Even OpenAI followed this arc: GPT-2 was a research curiosity; GPT-3 became an API; ChatGPT productized that API with a single interface, one wedge that reached 100 million users faster than any consumer product before it.

Specificity is the fastest path to general impact.

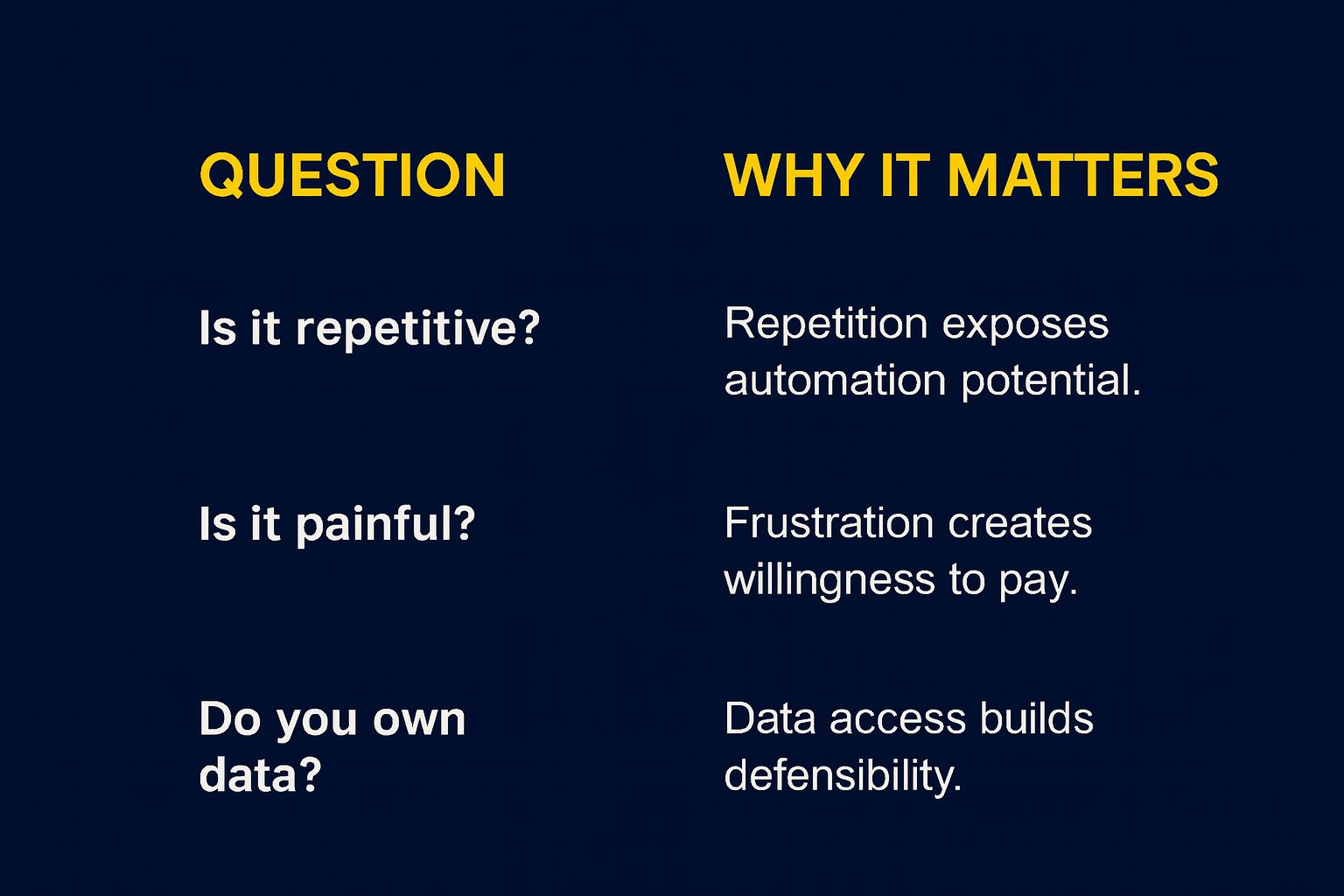

How to find your wedge

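Ask yourself three questions, one for each factor in the score below: How often does the task repeat? How frustrating is it today? How much of the data behind it can you access?

Then run this: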

W = Repetition × Frustration × Data Access

A marketing agency running daily reports? High score.

A legal team summarizing 300-page PDFs weekly? High score.

A novelist brainstorming once a month? Low score.

Start where the score peaks — not where glamour lives.
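
To make the score concrete, here is a minimal sketch. It assumes each factor is rated on a 1 to 5 scale; that scale, the `Candidate` class, and the example ratings are illustrative assumptions, not part of the formula itself.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A workflow being considered as a wedge (ratings are hypothetical, 1-5)."""
    name: str
    repetition: int    # how often the task recurs
    frustration: int   # how painful it is today
    data_access: int   # how much of the needed data you can reach

    def wedge_score(self) -> int:
        # W = Repetition x Frustration x Data Access
        return self.repetition * self.frustration * self.data_access


def rank_candidates(candidates: list[Candidate]) -> list[Candidate]:
    """Return candidates sorted from highest to lowest wedge score."""
    return sorted(candidates, key=lambda c: c.wedge_score(), reverse=True)


# Illustrative ratings only; replace them with your own judgment or survey data.
candidates = [
    Candidate("Novelist brainstorming", repetition=1, frustration=2, data_access=2),
    Candidate("Agency daily reports", repetition=5, frustration=4, data_access=5),
    Candidate("Legal PDF summaries", repetition=4, frustration=5, data_access=4),
]

for c in rank_candidates(candidates):
    print(f"{c.name}: W = {c.wedge_score()}")
```

Because the factors multiply, a very low rating on any one of them sinks the whole score, which is the point: a painful task you can't get data for is not a wedge yet.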

Case Snapshot — Relevance AI

Relevance AI began by summarizing survey responses.

The founders noticed customers rewriting those summaries into “themes.”

They automated that step — creating an insight discovery engine now used by hundreds of research teams.

Depth, not width, made them indispensable.

Go deep before you scale

Depth compounds.

A model tuned for a single workflow learns faster than a general one stretched across many.

That’s why OpenAI now fine-tunes GPTs for law, health, and code instead of enlarging a single giant model endlessly.

Every great product becomes great by being great at one small thing first.

So, instead of asking “How can we reach more users?” ask “How can we make this workflow unbreakably good?”

Once you’ve nailed that one loop, your next job is simple:

make it run without you.

Step 2 — Turn Insight Into Workflow

A clever prompt is a spark.

A workflow is a machine.

From demo to delivery

A designer in Berlin built a GPT prompt that rewrote UX copy in Apple’s tone.

Everyone loved it — but copy-pasting each sentence was tedious.

So she connected it to Figma through a small API script.

One button click, instant rewrite.

Within a week, 30 designers were using it. Within two months, it became a paid plugin.

That’s the leap: from manual cleverness to automated value.

The same pattern powers today’s biggest AI hits:

Zapier + GPT integrations turned one-off prompts into 24/7 automations.

Notion AI embedded intelligence directly inside user workflows instead of launching another app.

Rewind stitched its capture-index-retrieve loop so tightly that users forget they’re even using AI.

The Workflow Design Checklist

Define Input — Where does data come from? (Docs, CRM, APIs)

Automate Trigger — When does the process start? (Upload, schedule, event)

Process Intelligence — What transformation happens? (Summarize, detect, generate)

Deliver Output — Where is value consumed? (Slack, dashboard, email)

Collect Feedback — How do users correct or rate results?

If someone else can use it without your help, congratulations — you’ve built a product.
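
The five checklist items map naturally onto a small pipeline. The sketch below is one possible shape, not a prescribed architecture: the `Workflow` class, its field names, and the `first_sentence` placeholder (standing in for a real model call) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Workflow:
    """Hypothetical five-stage workflow mirroring the checklist."""
    read_input: Callable[[], str]       # 1. Define Input
    process: Callable[[str], str]       # 3. Process Intelligence
    deliver: Callable[[str], None]      # 4. Deliver Output
    feedback_log: list = field(default_factory=list)  # 5. Collect Feedback

    def run(self) -> str:
        # 2. Automate Trigger: call run() from a scheduler, upload hook, or event.
        raw = self.read_input()
        result = self.process(raw)
        self.deliver(result)
        return result

    def record_feedback(self, output: str, rating: int,
                        correction: Optional[str] = None) -> None:
        """Store a user rating and, if given, the corrected text."""
        self.feedback_log.append(
            {"output": output, "rating": rating, "correction": correction}
        )


def first_sentence(text: str) -> str:
    """Placeholder 'intelligence': keep the first sentence. Swap in a model call."""
    return text.split(".")[0].strip() + "."


delivered: list = []  # stands in for Slack, a dashboard, or email
workflow = Workflow(
    read_input=lambda: "Q3 churn fell 2 points. Details follow in the appendix.",
    process=first_sentence,
    deliver=delivered.append,
)

summary = workflow.run()
workflow.record_feedback(summary, rating=4)
print(summary)
```

Keeping each stage a plain function means someone else can swap the input source or the delivery channel without touching the rest, which is a reasonable test of whether the workflow runs without you.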

The human-in-the-loop principle

Full automation looks efficient on paper but breaks trust in reality.

Users want judgment, nuance, and veto power.

Grammarly suggests; it doesn’t overwrite.

Notion AI lets you edit freely.

That balance creates psychological safety — the gateway to adoption.

Automation builds efficiency; curation builds trust.

Mini-Case — Minutesly

A solo founder in Singapore connected Zoom transcripts to GPT via Slack.

At first, it was for his own team’s notes.

Six months later, his bot Minutesly was used by 40+ companies.

He didn’t invent a new model — he designed a repeatable, feedback-friendly workflow.

Visual Model — The 3-Layer AI Experiment Stack

| Layer | Purpose | Key Question |
| --- | --- | --- |
| 1. Micro Experiment | Test prompt + task + context | Does it work once? |
| 2. Workflow Layer | Automate + deliver value | Can it run without me? |
| 3. Learning Layer | Collect feedback + adapt | Can it improve over time? |

This stack shifts you from building features to building feedback systems.

Avoid the “tool trap”

Many teams launch impressive tools that never fit user rhythm.

A workflow succeeds only when it disappears into daily routine.

Ask:

“Would anyone notice if this stopped working tomorrow?”

If the answer is yes, you’ve built something vital.

Here’s when it clicked for me: scaling isn’t about adding features — it’s about shortening the distance between input and learning.

Once your product can sense, respond, and adapt within the same loop, growth stops being a goal and becomes a by-product.

Step 3 — Scale Learning, Not Code

Scaling isn’t a race to add features; it’s a race to learn faster.

The Learning Velocity Formula

LV = Feedback Quality × Experiment Frequency × Reflection Speed

High-velocity teams evolve faster than high-budget ones.
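
A quick worked example shows why the multiplication matters. The units here are assumptions chosen for illustration (the formula itself doesn't specify any): feedback quality as the fraction of feedback that is actionable, experiment frequency as experiments per week, and reflection speed as the reciprocal of the days it takes to act on a result.

```python
def learning_velocity(feedback_quality: float,
                      experiments_per_week: float,
                      days_to_reflect: float) -> float:
    """LV = Feedback Quality x Experiment Frequency x Reflection Speed.

    Assumed units (illustrative only):
    - feedback_quality: fraction of feedback that is actionable, 0 to 1
    - experiments_per_week: experiments shipped per week
    - days_to_reflect: days between seeing a result and acting on it;
      reflection speed is taken as 1 / days_to_reflect
    """
    if days_to_reflect <= 0:
        raise ValueError("days_to_reflect must be positive")
    reflection_speed = 1.0 / days_to_reflect
    return feedback_quality * experiments_per_week * reflection_speed


# Two hypothetical teams with the same feedback quality.
slow_team = learning_velocity(0.5, experiments_per_week=0.25, days_to_reflect=10)
fast_team = learning_velocity(0.5, experiments_per_week=1.0, days_to_reflect=2)

print(f"slow team LV: {slow_team:.4f}")
print(f"fast team LV: {fast_team:.4f}")
print(f"ratio: {fast_team / slow_team:.0f}x")
```

With identical feedback quality, the team that ships four times as often and reflects five times as fast ends up with twenty times the velocity. No budget line appears anywhere in the formula.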

Why learning beats features

Copy.ai noticed users trusted outputs they could edit.

Instead of more templates, they built an editable sandbox — usage doubled.

Rewind tracked when users re-queried the same topic — each patch improved retrieval.

Runway measured which generations users repeated — that data shaped its next model.

They weren’t guessing what worked; they were measuring it.

Build your feedback flywheel

Collect signals — log every input, output, and edit.

Reflect weekly — treat failures as experiments.

Systemize wins — automate recurring fixes.

Each micro-learning becomes a macro-advantage.
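
For the "collect signals" step, a minimal sketch might look like this. It uses Python's standard `difflib` to estimate how much of an output the user changed; the record fields, the `edit_ratio` measure, and the JSON Lines file format are assumptions for illustration, not a standard.

```python
import difflib
import json
from datetime import datetime, timezone


def edit_ratio(output: str, edited: str) -> float:
    """Share of the output the user changed: 0.0 = kept as-is, 1.0 = fully rewritten."""
    return 1.0 - difflib.SequenceMatcher(None, output, edited).ratio()


def make_signal(user_input: str, output: str, edited: str) -> dict:
    """Build one log record covering the input, the output, and the edit."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "input": user_input,
        "output": output,
        "edited": edited,
        "edit_ratio": round(edit_ratio(output, edited), 3),
    }


def append_signal(path: str, signal: dict) -> None:
    """Append the record as one JSON line so a weekly review can replay the log."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(signal) + "\n")


signal = make_signal(
    "Summarize the call",
    "The team agreed to ship Friday.",
    "The team agreed to ship on Friday.",
)
print(signal["edit_ratio"])
```

Calling `append_signal("signals.jsonl", signal)` after each interaction (the filename is hypothetical) gives the weekly reflection something concrete to read: outputs with a high edit ratio are the ones worth a closer look.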

Scaling trust

Gartner found 68 percent of users stop using an AI tool because results feel unpredictable.

Reliability beats brilliance.

A system that fails gracefully builds more loyalty than one that dazzles inconsistently.

Design for predictability: transparent logs, human review, clear boundaries.

That’s why Copilot, Grammarly, and Notion AI keep growing even when imperfect.

Mini-Case — The Hippocratic Loop

Hippocratic AI limited its model to nurse triage to gather safe, structured feedback.

Those validated corrections became training data for its broader system.

Their motto: “Scale comprehension before competence.”

Practical Metric Toolkit

Measure learning the way you measure revenue.

Common Pitfalls When Scaling AI Products

Every ambitious builder eventually learns that scaling an AI product isn’t about adding more models — it’s about adding more understanding.

But before teams figure that out, they usually fall into one (or several) of these traps.

Let’s go through the big four — and what they look like in real life.

Too Many Prompts, Too Little Purpose

It’s tempting to believe that more prompts equal more value.

You start with one clever workflow, it works, and soon you’ve got a library of “cool ideas” that impress investors but confuse users.

The result? A product that looks wide but feels shallow.

This happens when builders confuse creativity with coherence.

Jasper succeeded not because it had 500 prompts — but because it perfected one, the long-form blog generator, before moving to others.

A clever prompt is a seed.

But without a workflow around it — automation, delivery, feedback, trust — it never grows roots.

Ask: “Does this prompt solve a recurring problem, or just showcase what AI can do?”

If it’s the latter, it’s not a product yet.

Skipping the Human-in-the-Loop

Automation looks efficient — until something goes wrong.

In early AI systems, full automation often led to silent errors. Emails sent to the wrong customers. Financial summaries missing critical data. Healthcare chatbots giving overconfident advice.

Each of these moments breaks trust faster than it saves time.

Human review may look like friction, but it’s actually quality assurance at scale.

The best AI products — from Grammarly to Notion AI — give users easy control. They let you edit, review, and override the system when necessary.

That small layer of human agency transforms fear into confidence.

Rule of thumb: If your users can’t tell where they can intervene, you’ve automated too much.

AI works best as an assistant, not a replacement.

Premature Scaling

This one kills more startups than anything else.

You find early traction in one use case — then rush to expand horizontally.

Suddenly, your data signals blur. Feedback becomes inconsistent. The product loses focus.

Scaling before your feedback loop stabilizes is like building a second floor before the foundation dries.

The team at Runway resisted that temptation. For nearly a year, they focused on perfecting background removal for videos before adding text-to-video generation. That narrow base gave them precision data that later fueled their expansion.

Ask before scaling: “Is our core loop stable, measurable, and improving week over week?”

If the answer isn’t a confident yes, you’re scaling noise — not learning.
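
One way to turn that question into a check, sketched under assumptions: pick a single weekly metric (here, a hypothetical acceptance rate, the share of outputs users kept without edits) and require it to rise for several weeks in a row. The four-week window and the strict "every week" rule are arbitrary choices for illustration.

```python
def improving_week_over_week(weekly_metric: list[float], min_weeks: int = 4) -> bool:
    """True if the metric rose in each of the last `min_weeks` week-to-week steps."""
    if len(weekly_metric) < min_weeks + 1:
        return False  # not enough history to call the loop stable
    recent = weekly_metric[-(min_weeks + 1):]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical acceptance rates, oldest week first.
steady = [0.50, 0.55, 0.58, 0.61, 0.66]
noisy = [0.50, 0.55, 0.53, 0.61, 0.66]

print(improving_week_over_week(steady))
print(improving_week_over_week(noisy))
```

A stricter or looser rule may suit your product better; what matters is that "a confident yes" is read off a number rather than a feeling.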

Chasing Vanity Metrics

Growth numbers look great on a pitch deck — but they can distract from the truth.

High user sign-ups don’t mean users are learning with you.

Viral demos don’t mean sustainable retention.

In AI, the only metric that truly compounds is Learning Velocity — how fast your system improves based on real-world feedback.

Copy.ai’s early team learned this first-hand. Their biggest leap wasn’t from a viral launch, but from discovering that users who edited outputs were the ones who stayed. That insight changed their roadmap entirely.

Pro tip: Track improvement rate per user before total users. The former builds the latter.

And perhaps the most important reminder of all — one of my favorite quotes from Andrej Karpathy:

“In AI, most startups die of indigestion, not starvation — they try to automate everything before learning anything.”

The lesson: Don’t rush to scale complexity.

Scale understanding.

The Learning Velocity Flywheel

You already know the formula; here’s how to make it real:

Collect feedback intentionally. Track edits, revision time, satisfaction. Implicit data (re-queries, retries) tells the truth.

Analyze meaningfully. Tag issues — accuracy, tone, completeness, hallucination.

Act quickly. Ship micro-updates weekly. Speed > size.

Close the loop publicly. Tell users what changed. Transparency breeds trust.

Feedback → Reflection → Improvement → Communication → Feedback

That loop — not your model — is your moat.
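
The "analyze meaningfully" step can start as a simple tally. This sketch uses the four tags named above; the record shape (a dict with a `tags` list) and the function names are hypothetical.

```python
from collections import Counter
from typing import Optional

# The four issue tags named in the flywheel.
ISSUE_TAGS = {"accuracy", "tone", "completeness", "hallucination"}


def tally_issues(feedback: list[dict]) -> Counter:
    """Count how often each issue tag appears in a batch of feedback records."""
    counts: Counter = Counter()
    for record in feedback:
        for tag in record.get("tags", []):
            if tag not in ISSUE_TAGS:
                raise ValueError(f"unknown tag: {tag}")
            counts[tag] += 1
    return counts


def top_issue(feedback: list[dict]) -> Optional[str]:
    """Return the most frequent tag, or None if nothing was tagged."""
    counts = tally_issues(feedback)
    return counts.most_common(1)[0][0] if counts else None


# Illustrative week of tagged feedback.
this_week = [
    {"id": 1, "tags": ["tone"]},
    {"id": 2, "tags": ["accuracy", "tone"]},
    {"id": 3, "tags": ["hallucination"]},
    {"id": 4, "tags": []},
]

print(tally_issues(this_week))
print(top_issue(this_week))
```

The top tag is a sensible candidate for that week's micro-update, and the before-and-after counts give you something specific to report when you close the loop publicly.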

The Macro Shift — From Tools to Ecosystems

Five years ago, software was static: we shipped, users adapted.

Now, AI systems adapt back.

Model quality is commoditizing.

Differentiation now comes from feedback architecture — how fast your system learns.

Every correction is a training example.

Every user is an unpaid teacher.

The next generation of AI PMs will behave less like engineers and more like behavior designers.

Their job: sculpt interactions that feed the learning loop.

“The next wave won’t out-model you — it’ll out-learn you.”

This is the rise of Learning Infrastructure: products that grow smarter every time they’re used.

What This Signals for 2026

We’re entering the Age of Adaptive Products.

Model access will be universal. APIs level the field.

Feedback loops will define moats.

Trust architecture will replace feature lists as the new marketing.

Learning velocity will decide valuation multiples.

As BCG put it: “Systems that personalize faster than competitors can copy them will dominate their categories.”

If the 2010s were about moving software to the cloud, the 2020s are about moving learning into the product itself.

Closing Reflection — The Real Game of Building with AI

When I look back at every product that truly lasted — Slack, Jasper, Notion, Rewind — none of them started with a masterplan. They began with curiosity.

They were small loops of learning — a single workflow, a single behavior, a single question that kept evolving.

Slack was born out of a failed game project.

Jasper started as a way for a few marketers to write faster.

Notion was built by two people who just wanted to design a workspace that didn’t make them miserable.

Each of these began with one repeatable loop that worked slightly better than expected — and they doubled down on it until it became magic.

That’s the quiet truth of AI right now.

Everyone’s chasing scale, but the real moat is learning velocity — how fast your system, your team, and your mindset can learn.

The market celebrates the speed of launching.

But the winners move at the speed of learning.

They don’t measure success by the number of features shipped, but by the number of insights integrated.

Every iteration teaches you something the model never could: what humans actually trust, what they ignore, what they retry, and where they hesitate.

That’s where the real product is — not in the prompt, not in the UI, but in the behavior change that keeps unfolding between you and your users.

If you zoom out, every enduring AI product has been built on one invisible equation:

Learning Velocity = Feedback Quality × Experiment Frequency × Reflection Speed.

Most teams obsess over the first two — collecting feedback and experimenting fast.

But the last one — reflection speed — is where great products separate from the rest.

Reflection is the pause between shipping and scaling.

It’s where you translate user behavior into product wisdom.

AI products don’t die because of bad code. They die because they stop listening.

If I were building an AI product from scratch today, I’d spend less time prompting and more time observing.

I’d find one recurring pain point.

Automate it just enough to help — but not enough to hide the edges.

And then I’d study every mistake it makes.

Because every mistake is a mirror.

It shows you how humans think, what they value, and what they’ll never fully outsource to machines.

AI isn’t replacing humans.

It’s revealing what makes humans indispensable: our curiosity, our context, our care.

Where This All Leads

Big things never start big.

They start precise.

They start with one working loop that refuses to stay small.

Start small.

Learn fast.

Scale trust.

Because the future of AI won’t belong to those who code the fastest —

It’ll belong to those who learn the fastest.

Those who listen harder, reflect sooner, and improve relentlessly.

That’s how tiny AI experiments become enduring products.

That’s how curiosity turns into legacy.

Keep your loop alive.

— Naseema
